Behind the growing use of large language models (LLMs) lurks a pressing question: are these tools truly empowering us, or quietly eroding our capacity for independent thought and critical reflection? As Zimbabwe’s digital landscape expands, this debate no longer belongs in ivory-tower seminars alone; it affects every home, classroom and boardroom.

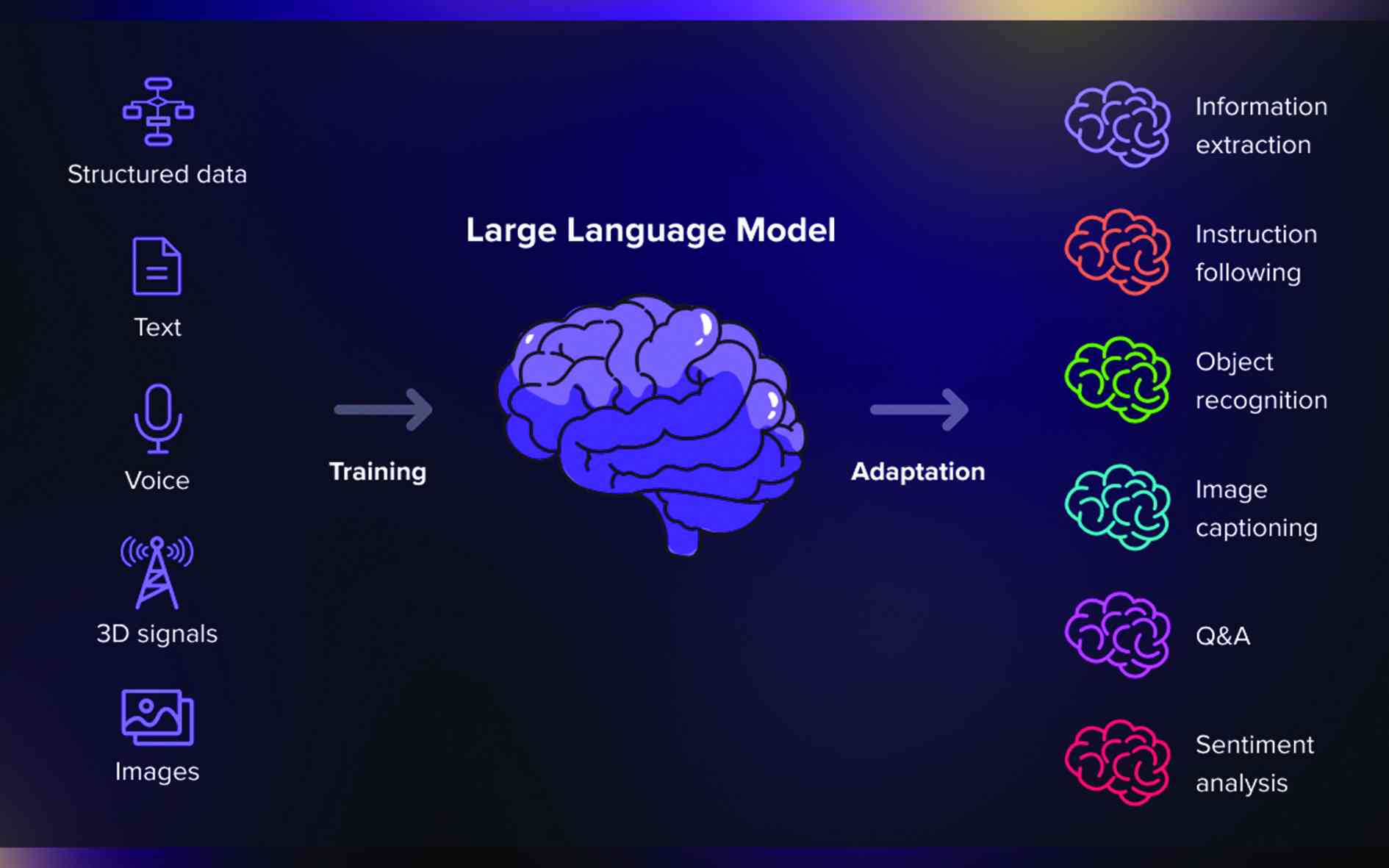

At its best, an LLM can summarise a dense legal statute, suggest improvements to a business proposal or even translate provincial news articles into fluent English or Shona.

Many of us have felt the relief of offloading mundane writing tasks to a machine, freeing up time for other priorities. But efficiency is never neutral. It embodies choices about what work we value and what effort we are willing to relinquish.

If “faster” replaces “better thought-through”, we risk sacrificing the very processes that nurture insight, innovation and sound judgement.

Researchers in the United States have warned of this pitfall. A pioneering MIT study found that participants who drafted essays using ChatGPT not only engaged less critically with the content they produced, but also demonstrated weaker activation in brain regions linked to analysis and memory.

In contrast, those who wrote without AI assistance formed stronger neural connections and reported greater satisfaction with their work. In plain terms, every shortcut we take with AI can chip away at the mental muscle we need to learn, remember and reflect.

There is also the growing danger of AI-driven echo chambers. For many Zimbabweans, social media timelines are already curated; algorithms decide which political debates, church events or farming tips appear first.

When LLMs enter the fray, offering “top answers” to complex questions, they risk reinforcing the same filter bubbles. We see this phenomenon in synthetic news stories, where politically charged imagery and engineered commentary swirl to create a false consensus. It feels as though “everyone” supports a certain view when, in fact, machine-generated outrage and praise are driving the narrative for commercial gain.

Our democratic processes are particularly vulnerable. In a country where civic engagement remains essential for meaningful reform, citizens must evaluate claims, question motives, and weigh evidence.

If an AI-crafted social media post arrives with emotive language, expertly tuned for virality, many of us will react instantly, sharing, liking or commenting without pausing to verify its source. Over time, this erodes the very foundation of informed debate. We move from thoughtful deliberation to knee-jerk responses, manipulated by invisible algorithms rather than genuine persuasion.

The convenience narrative around AI often asserts that these tools “free up cognitive bandwidth”, allowing us to dedicate our minds to higher-order tasks. Yet the opposite can occur.

When routine summarising or drafting is outsourced entirely, we miss the opportunity to wrestle with ideas, to refine arguments and to learn from mistakes.

Imagine a student in Gweru who relies on AI to compose an assignment. She may achieve a respectable grade, but she forfeits the process of critical engagement that transforms knowledge into understanding.

The craft of writing (choosing the right words, constructing logical flows and wrestling with ambiguity) cannot be automated without collateral damage.

Consider our education system, already oriented around high-stakes examinations. In Form Four and Form Six, performance is paramount. If students discover that an AI can generate an excellent essay in minutes, the temptation to use it is undeniable.

Teachers may be pressured to accept polished assignments, and examiners might be none the wiser. Yet such expedience only produces hollow achievements.

When these same students enter university or the workforce, the gaps in their reasoning and analytical skills will become painfully apparent, whether drafting policy papers in government ministries or analysing financial projections in private firms.

In Zimbabwe’s rural areas, where internet connectivity remains patchy, some communities have welcomed digital literacy workshops that introduce basic computer skills. Soon, these trainings will include AI tools. Here lies an opportunity: to instil healthy scepticism alongside technical know-how.

If villagers learn that AI suggestions must be corroborated by consulting agricultural extension officers or local experts, they avoid the trap of blind reliance. Grounding AI in human oversight ensures technology serves local knowledge, rather than supplanting it.

So, how should Zimbabweans navigate this new reality? We must reclaim the value of deliberate effort. In schools and workplaces, assignments and projects should emphasise process as much as the end product. Students might submit annotated outlines and draft versions, alongside final essays.

Business proposals could require a “reflection log”, where authors explain how they structured their arguments, cite their sources and critique their work. By making the thinking visible, we discourage mindless AI usage and celebrate intellectual craftsmanship.

We must also recover a cultural appreciation for uncertainty and contradiction. Critical thinking thrives on grappling with ambiguity, weighing opposing viewpoints and revising one’s beliefs. In conversations in classrooms, boardrooms and beer halls alike, we should celebrate questions more than answers.

A society that prizes speedy resolutions above nuanced debate sows the seeds of intellectual atrophy. By reinforcing that doubt is a sign of engagement, not of weakness, we nurture resilient minds capable of confronting tomorrow’s challenges.

This is not a call to shun AI; that would be both futile and misguided. Generative models offer tremendous benefits in healthcare diagnostics, in flood-risk modelling and in preserving endangered languages. But each deployment must be preceded by a simple question: why use AI here? If the task involves rote transformation of text or data, AI can be leveraged responsibly. If the objective demands deep contextual insight, such as drafting land reform policy or analysing economic indicators, human judgement must lead.

Efficiency, in its truest sense, is not merely doing things faster. It is about doing them better: with depth, coherence and ethical intent. When Zimbabweans confront the next wave of digital disruption, from AI-powered translation services to fully automated customer care bots, we must insist on embedding human oversight at every stage.

Sagomba is a chartered marketer, policy researcher, AI governance and policy consultant, and ethics of war and peace research consultant. LinkedIn: @Dr. Evans Sagomba; X: @esagomba.